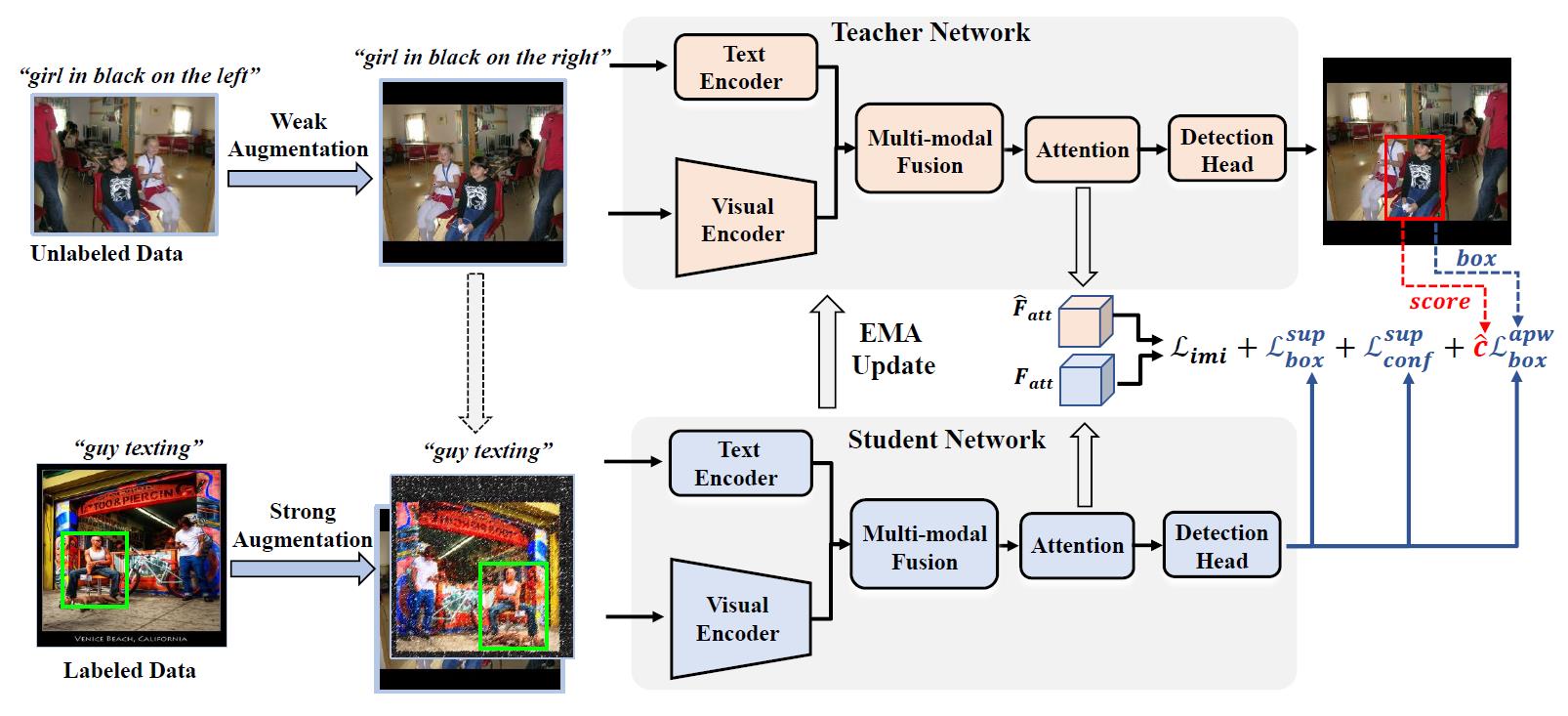

Referring expression comprehension (REC) aims to locate the target instance in an image according to a given natural language expression. Existing REC approaches often require a large number of instance-level annotations for fully supervised learning, which are laborious and expensive to collect. In this paper, we present the first attempt at semi-supervised learning for REC and propose a strong baseline method called RefTeacher. Inspired by recent progress in computer vision, RefTeacher adopts a teacher-student learning paradigm, in which the teacher REC network predicts pseudo-labels for optimizing the student network. This paradigm allows REC models to exploit massive unlabeled data using only a small fraction of labeled data, greatly reducing annotation costs. We also identify two key challenges in semi-supervised REC, namely sparse supervision signals and more severe pseudo-label noise. To address these issues, we equip RefTeacher with two novel designs called Attention-based Imitation Learning (AIL) and Adaptive Pseudo-label Weighting (APW). AIL helps the student network imitate the recognition behaviors of the teacher, thereby obtaining sufficient supervision signals. APW helps the model adaptively adjust the contributions of pseudo-labels of varying quality, thus avoiding confirmation bias. To validate RefTeacher, we conduct extensive experiments on three REC benchmark datasets. Experimental results show that RefTeacher obtains clear gains over the fully supervised methods. More importantly, using only 10% labeled data, our approach allows the model to achieve nearly 100% of the fully supervised performance, e.g., a gap of only 2.78% on RefCOCO.
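To make the teacher-student idea concrete, here is a minimal, framework-free sketch of one unlabeled training step. It is not the RefTeacher implementation. The names `PseudoLabel`, `weighted_pseudo_label_loss` and `ema_update` are hypothetical, the confidence-proportional weighting is only a simple stand-in for APW, and the exponential-moving-average (EMA) teacher update is a common convention in teacher-student frameworks that the text above does not confirm.

```python
from dataclasses import dataclass
from typing import List, Sequence

Box = Sequence[float]  # (x1, y1, x2, y2)


@dataclass
class PseudoLabel:
    """A box predicted by the teacher on an unlabeled image-expression pair."""
    box: Box
    confidence: float  # teacher score in [0, 1]; assumed to reflect label quality


def l1_box_loss(pred: Box, target: Box) -> float:
    """Mean absolute error over the four box coordinates."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / 4.0


def weighted_pseudo_label_loss(
    student_boxes: List[Box], pseudo_labels: List[PseudoLabel]
) -> float:
    """Confidence-weighted mean of per-sample losses.

    Assumption: weights are proportional to teacher confidence, so
    low-quality pseudo-labels contribute less to the student's update.
    """
    total_weight = sum(pl.confidence for pl in pseudo_labels)
    if total_weight == 0:
        return 0.0
    weighted = sum(
        pl.confidence * l1_box_loss(sb, pl.box)
        for sb, pl in zip(student_boxes, pseudo_labels)
    )
    return weighted / total_weight


def ema_update(
    teacher: List[float], student: List[float], decay: float = 0.99
) -> List[float]:
    """Assumed teacher update: move teacher weights slowly toward the student."""
    return [decay * t + (1.0 - decay) * s for t, s in zip(teacher, student)]


if __name__ == "__main__":
    student_boxes = [(0, 0, 1, 1), (0, 0, 2, 2)]
    pseudo = [PseudoLabel((0, 0, 1, 1), 0.9), PseudoLabel((0, 0, 4, 4), 0.1)]
    print(weighted_pseudo_label_loss(student_boxes, pseudo))
    print(ema_update([1.0], [0.0], decay=0.9))
```

In this toy run the second pseudo-label disagrees strongly with the student but carries little weight, so it barely affects the loss. That is the intuition behind down-weighting noisy pseudo-labels to limit confirmation bias.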

There's a lot of excellent work that was introduced around the same time as ours.

RealGIN, TransVG and SimREC are the base one-stage REC models we use.

We draw inspiration from semi-supervised learning methods such as STAC and Unbiased Teacher v2.